AI at the Edge, an Opportunity

Where AI, and DeepSeek in particular, has recently sparked fear and awe, Synadia sees opportunity.

In late January, DeepSeek wiped billions off the market value of US tech giants. It disrupted the paradigm we knew from ChatGPT (OpenAI), Gemini (Google) and Claude (Anthropic), to name just a few AI models. DeepSeek claims, whether or not the claim holds up, that its latest AI models are on a par with or better than industry-leading US models at a fraction of the cost, and cheaper to use overall.

In fact, the cost of large language models (LLMs) at a given level of performance has been falling by 10x every year.

With costs falling, Synadia sees an opportunity for AI everywhere: across clouds, on premises, in IoT environments and especially at the edge, where AI will affect users most directly.

“By 2029, at least 60% of edge computing deployments will use composite AI (combining various approaches like machine learning, predictive and generative AI), compared to less than 5% in 2023,” according to Gartner, Predicts 2025, November 2024.

Synadia – Messaging Connectivity for AI Apps at the Edge

Synadia is uniquely qualified to weigh in on how AI will build trust and unlock value in systems across industries, especially at the edge.

Synadia provides a platform for building and deploying distributed systems across any cloud, any region, on premises and at the edge. Powered by NATS.io open-source technology, Synadia’s platform focuses on three core areas (connectivity, data and workloads) and delivers ultra-low-latency, real-time access to microservices and data. It supports edge applications and breaks free of federated architectures. And it offers self-protection and strong security across different topologies.

Synadia is the ideal connectivity platform for distributed systems in the era of edge computing, IoT and AI.

Gartner predicts that by 2029, 50% of enterprises will use edge computing, up from just 20% in 2024.

Synadia is edge-native, AI-ready and runs everywhere. Here’s how.

The Synadia Tech Stack provides everything that apps need to thrive at the edge. It is built on three key open-source technologies: NATS, the connectivity layer; JetStream, the data layer; and Nex, the workload layer.
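
To make the connectivity layer a little more concrete: NATS addresses messages by subject, a string of dot-separated tokens, and subscribers can use wildcards, where `*` matches exactly one token and `>` matches one or more trailing tokens. The sketch below is a minimal, standalone Python illustration of that matching rule only, not the NATS server's implementation; the function name `subject_matches` and subjects such as `edge.site42.inference.request` are hypothetical.

```python
def subject_matches(pattern: str, subject: str) -> bool:
    """Return True if a NATS-style subscription pattern matches a subject.

    Simplified sketch: '*' matches exactly one token; '>' matches one or
    more remaining tokens and is only valid as the last pattern token.
    """
    p_tokens = pattern.split(".")
    s_tokens = subject.split(".")
    for i, p in enumerate(p_tokens):
        if p == ">":
            # '>' must be the final token and must cover at least one token.
            return i == len(p_tokens) - 1 and len(s_tokens) > i
        if i >= len(s_tokens):
            return False  # subject ran out of tokens before the pattern did
        if p != "*" and p != s_tokens[i]:
            return False  # literal token mismatch
    # Every pattern token consumed; the subject must not have extra tokens.
    return len(p_tokens) == len(s_tokens)


if __name__ == "__main__":
    # Hypothetical subjects for an edge deployment.
    print(subject_matches("edge.*.inference.request", "edge.site42.inference.request"))  # True
    print(subject_matches("edge.>", "edge"))  # False: '>' needs at least one token
```

With this kind of addressing, a cloud-side service subscribed to `edge.>` would see traffic from every site, while a process at one site can listen only to its own subjects.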

Synadia also unifies AI data across cloud and edge for fast and accurate business decisions while enabling AI agents to work together seamlessly.

So, I would like to share a few observations on edge and AI. Where does our confidence as pundits come from? From our customers and their experiences with edge and AI.

1. AI Tech Stack: Focus on Inference, Not Training Massive Models

In 2025, if you’re not optimizing for inference, that is, for using models to make predictions and solve problems, you have not considered the implications of the new paradigm. Here’s why.

The cost of achieving OpenAI o1-level intelligence fell 27x in just the last three months, as my Google Cloud colleague Antonio Gulli observed: an impressive price-performance improvement.

Source: LinkedIn


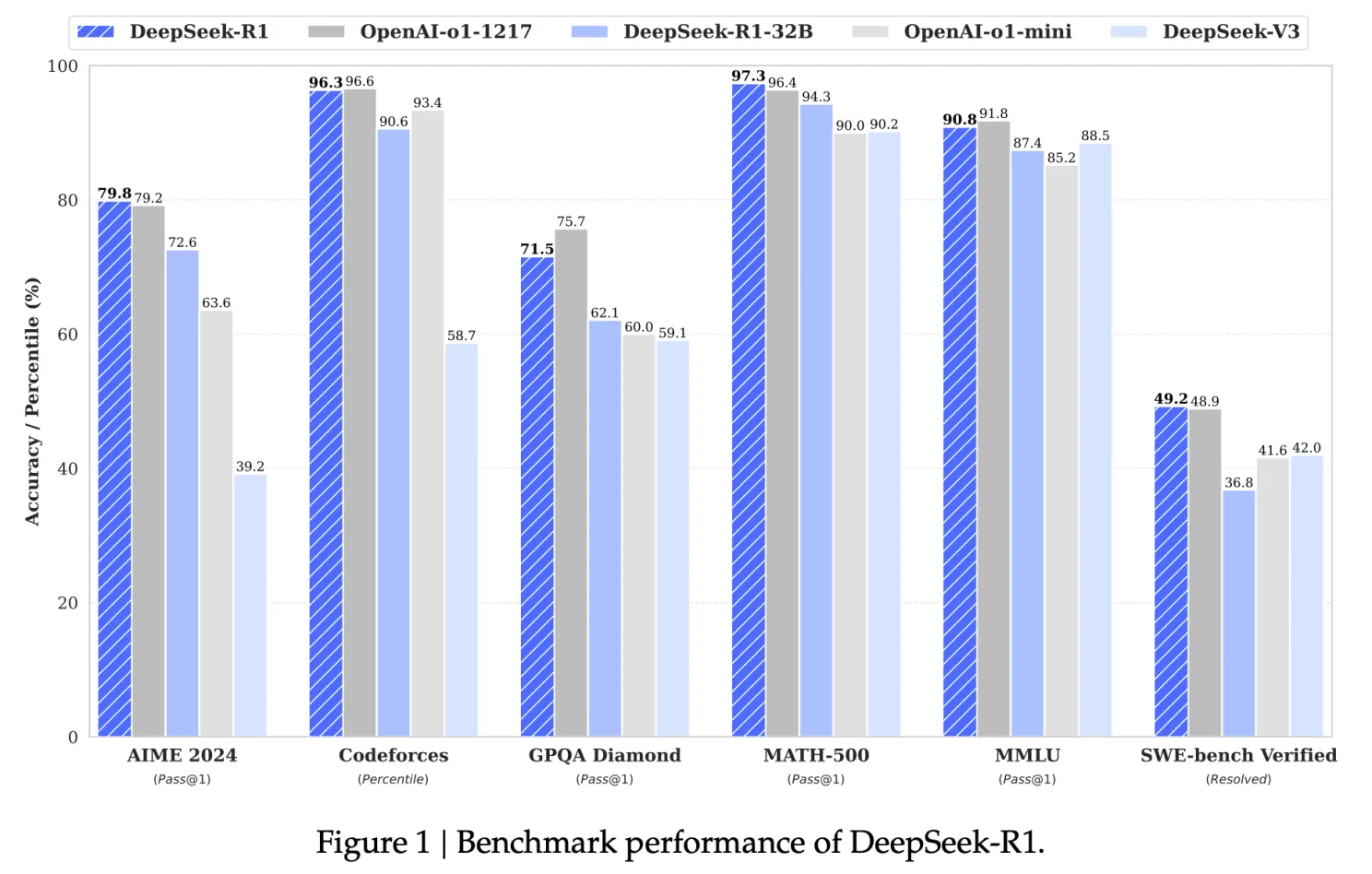
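
The two rates quoted so far (10x per year, and 27x in three months) are easier to compare on a common time base. Assuming a steady, compounding decline, which is an idealization since real price cuts arrive in steps, a 10x drop per year works out to roughly 1.78x per quarter, so a 27x drop within a single quarter is far ahead of that trend. A minimal Python sketch of the arithmetic; the function names and the $100 workload are hypothetical:

```python
def per_period_factor(total_factor: float, periods: int) -> float:
    """Steady per-period reduction factor that compounds to `total_factor`."""
    return total_factor ** (1.0 / periods)


def cost_after(start_cost: float, factor_per_period: float, periods: int) -> float:
    """Cost left after `periods` periods of a steady decline by `factor_per_period`."""
    return start_cost / factor_per_period ** periods


if __name__ == "__main__":
    # A 10x-per-year decline spread evenly over 4 quarters.
    quarterly = per_period_factor(10, 4)
    print(f"10x per year is about {quarterly:.2f}x per quarter")  # about 1.78x
    # How a single 27x quarter compares with that trend pace.
    print(f"27x in one quarter is about {27 / quarterly:.0f}x the trend pace")
    # Hypothetical workload costing $100 today, after three years at 10x per year.
    print(f"${cost_after(100.0, 10, 3):.2f}")  # $0.10
```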

The DeepSeek AI breakthrough proves this point perfectly. Its R1 model (reportedly trained for just $5.6 million, a fraction of OpenAI’s rumored $500 million budget for its o1 model) matches and, on some key benchmarks, outperforms major competitors.

Source: https://arxiv.org/pdf/2501.12948


No doubt companies will enhance LLMs’ capabilities, probably with smaller, purpose-built models and by retraining them on new data sets.

In terms of model training, Synadia supports flexible training workflows, whether models are local or distributed, by efficiently managing data exchange between systems.

With LLM training now cost-efficient and, judging by benchmarks, effective, it’s time to put the models to work on inference: solving problems before they occur.

Synadia accelerates inference by enabling local data processing, minimizing delays and improving responsiveness. It supports traversal across multiple models and agents, whether local or remote, offering flexibility for prompt augmentation, retrieval-augmented generation (RAG) workflows and model matching in any location.
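
One way to picture model matching across locations is a local-first router: send each request to a model that can handle the task, prefer models running at the edge, and fall back to remote models only when they still fit the latency budget. The sketch below is a hypothetical, in-process illustration, not Synadia's API; `ModelEndpoint`, `pick_model`, the model names and the latency figures are all made up, and in a real deployment the `infer` callables might be NATS request/reply calls rather than local lambdas.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ModelEndpoint:
    """Hypothetical inference endpoint, running locally (edge) or remotely (cloud)."""
    name: str
    location: str              # "local" or "remote"
    expected_latency_ms: float
    tasks: set[str]
    infer: Callable[[str], str]


def pick_model(endpoints: list[ModelEndpoint], task: str,
               budget_ms: float) -> Optional[ModelEndpoint]:
    """Return a model that can handle `task` within `budget_ms`, preferring local ones."""
    candidates = [e for e in endpoints
                  if task in e.tasks and e.expected_latency_ms <= budget_ms]
    # Local endpoints sort first (False < True), then the fastest wins.
    candidates.sort(key=lambda e: (e.location != "local", e.expected_latency_ms))
    return candidates[0] if candidates else None


# Hypothetical endpoints with made-up latencies.
endpoints = [
    ModelEndpoint("cloud-llm", "remote", 400.0, {"summarize", "classify"},
                  lambda p: "cloud:" + p),
    ModelEndpoint("edge-classifier", "local", 5.0, {"classify"},
                  lambda p: "edge:" + p),
]

if __name__ == "__main__":
    choice = pick_model(endpoints, "classify", budget_ms=50.0)
    print(choice.name, choice.infer("vibration spike"))  # edge-classifier edge:vibration spike
    print(pick_model(endpoints, "summarize", budget_ms=50.0))  # None
```

Returning `None` rather than silently picking a slow model makes the budget violation explicit, so the caller can decide whether to degrade gracefully or wait.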

PowerFlex, a subsidiary of EDF, the French utility, is leading the charge in intelligent “green” energy solutions at the edge: EV charging stations, storage batteries in large-scale commercial and residential complexes, and photovoltaic systems with solar panels on rooftops across the US and in Canada. With Synadia and NATS, PowerFlex powers its intelligent fleet of EV charging stations, batteries and solar arrays at the edge, with zero data loss. According to David Morley, Director of Software Engineering at PowerFlex, “We know a customer site is having a bad day before the customer even knows it.”

Or take Rivitt, a leader in capturing and managing machine data across various stages of oil and gas operations:

“Synadia Cloud provides reliable and real-time (in milliseconds) data from the edge for rapid decision-making and remediation, which is crucial in the oil and gas industry,” according to Miles Hill, co-founder of Rivitt.

2. AI Architecture: Adopt an Edge-First Mindset

Although the cloud’s role in AI isn’t disappearing (of course), the default is shifting rapidly towards edge-first thinking.

Pioneers in the most AI-advanced industries, such as manufacturing, have exposed the limitations of cloud-first AI approaches. According to Gartner, 27% of manufacturing enterprises have already deployed edge computing, and 64% plan to have it deployed by the end of 2027. Why the rush to edge-first AI architectures?

In industrial applications, especially those requiring real-time control and automation, latency requirements as low as 1-10 milliseconds demand a fundamental rethinking of distributed AI system design. At these speeds, edge-to-cloud roundtrips are impractical; systems must operate as edge-native, with processing and decision-making happening locally at the edge.
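
A back-of-the-envelope check shows why. A decision fits the control loop only if the network round trip plus inference time stays within the latency budget. The figures below (3 ms of local inference and a 40 ms edge-to-cloud round trip) are illustrative assumptions, not measurements, and the function names are hypothetical; substitute your own numbers.

```python
def fits_budget(budget_ms: float, inference_ms: float, network_rtt_ms: float = 0.0) -> bool:
    """True if one decision (network round trip + inference) fits within the budget."""
    return network_rtt_ms + inference_ms <= budget_ms


def max_network_rtt_ms(budget_ms: float, inference_ms: float) -> float:
    """Largest network round trip the loop can absorb; negative means no headroom."""
    return budget_ms - inference_ms


if __name__ == "__main__":
    BUDGET_MS = 10.0      # upper end of the 1-10 ms range cited above
    LOCAL_INFER_MS = 3.0  # assumed edge inference time (illustrative)
    CLOUD_RTT_MS = 40.0   # assumed edge-to-cloud round trip (illustrative)

    print(fits_budget(BUDGET_MS, LOCAL_INFER_MS))                # True: decide at the edge
    print(fits_budget(BUDGET_MS, LOCAL_INFER_MS, CLOUD_RTT_MS))  # False: round trip alone exceeds budget
    print(max_network_rtt_ms(BUDGET_MS, LOCAL_INFER_MS))         # 7.0
```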

One of Synadia’s most innovative customers, Intelecy, a no-code AI platform that helps industrial companies optimize factory and plant processes with real-time machine learning insights, perfectly illustrates this paradigm shift. Its initial cloud-first approach had processing delays of 15-30 minutes. By redesigning its AI architecture for the edge, Intelecy achieved round-trip latencies of less than one second. This dramatic improvement enabled real-world applications like automated temperature control in dairy production, where ML models can provide real-time insights for process optimization.

Processing data where it is generated isn’t just more efficient—it’s becoming a competitive necessity for every industry.

3. AI Impact: Let’s Get Real

When AI arrived, we were in awe of what its models could do. Now we need to move past fear and awe and ask: How can we solve real business problems where people are affected, for example in connected cars, quality-controlled manufacturing and food processing? The most compelling customer AI stories in 2025 will demonstrate measurable business impact on users and consumers at the edge.

Intelecy’s Chief Security Officer Jonathan Camp explains how AI can help ensure quality in manufacturing: “A dairy can use a machine learning forecast model to set temperature control systems using the real-time predicted state of the cheese production process. The process engineering team can use Intelecy insights to identify trends and then automate temperature adjustments on a vat of yogurt to ensure quality and output are not compromised.”

Source: https://www.intelecy.com/norvegia-customer-stories-resources-intelecy

The shift is clear: success is no longer measured in model capabilities, but in hard metrics like revenue gained, costs saved and efficiency improved. The question isn’t “What can AI do?” but “What value did AI deliver this quarter?”

The Elephant in the Room: Can AI Be Trusted?

We’ve solved training costs. We’ve started to crack real-time processing. Now, the focus shifts to trust and unlocking value: Can AI deliver consistent, reliable and verifiable results at scale?

Early on, we made a claim: Synadia is uniquely qualified to weigh in on how AI will build trust and unlock value in systems across industries, especially at the edge.

Synadia contributes two capabilities to AI: prompt enrichment and model collaboration. Synadia supports prompt augmentation with real-time data from multiple sources, so that generated outputs are contextually relevant and precise. It also enables workflows in which multiple models collaborate to produce more sophisticated, higher-quality results.
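
Both ideas can be sketched in a few lines. The example below is a hypothetical illustration, not Synadia's API: `enrich_prompt` prepends the latest readings from several sources to a question, and `collaborate` chains two models so that one drafts and the other reviews. The sensor names are made up, and the models are stub lambdas; in a NATS-based deployment the readings might come from telemetry subjects or a JetStream key-value bucket, and the models might sit at the edge or in the cloud.

```python
from __future__ import annotations

from typing import Callable

Model = Callable[[str], str]  # hypothetical: prompt in, completion out


def enrich_prompt(question: str, readings: dict[str, dict[str, float]]) -> str:
    """Prepend the latest readings from several sources to the user's question."""
    lines = ["Context (latest readings):"]
    for source in sorted(readings):  # sorted for a deterministic prompt
        values = ", ".join(f"{k}={v}" for k, v in sorted(readings[source].items()))
        lines.append(f"- {source}: {values}")
    lines.append(f"Question: {question}")
    return "\n".join(lines)


def collaborate(prompt: str, drafter: Model, reviewer: Model) -> str:
    """Two-model chain: one model drafts an answer, a second reviews and refines it."""
    draft = drafter(prompt)
    return reviewer(f"{prompt}\n\nDraft answer:\n{draft}\n\nReview and correct the draft.")


if __name__ == "__main__":
    # Hypothetical sensor readings.
    readings = {"line-1/temp": {"celsius": 31.5}, "line-1/ph": {"ph": 4.6}}
    prompt = enrich_prompt("Should line 1 be cooled?", readings)
    print(prompt)
    # Stub models stand in for real local or remote LLM calls.
    answer = collaborate(prompt, lambda p: "Hold temperature.",
                         lambda p: "Reviewed: " + p.split("Draft answer:\n")[1].split("\n")[0])
    print(answer)  # Reviewed: Hold temperature.
```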

Ramon Bosch, CTO at Nuclia, explains:

“NATS provided exactly what we needed for our distributed AI workloads. The simplicity of its event-driven architecture allowed us to focus on AI development rather than dealing with complex messaging infrastructure. For any AI company dealing with real-time data, event streaming, or distributed inference, I highly recommend NATS and NATS JetStream as the backbone of your architecture.”

Finally, there is nothing more personal than Personal AI, a revolutionary artificial intelligence platform trained on individual users’ memories, drawn from their conversations, social media interactions and other forms of communication. Personal AI is attempting something that has never been done before: to literally create a personal artificial intelligence agent that gives creators and individuals additional creative and intellectual powers.

Personal AI processes thousands of parallel data streams to create custom AI models in under 25 minutes, leveraging NATS and NATS JetStream for scalability, cost efficiency and the security needed for highly sensitive personal data running in applications at the edge.

Clearly, we are moving towards an era of agentic AI, in which AI-made decisions will be implemented automatically by chains of AI functions. Are you ready?